UNC Charlotte (2009-Present)

Dissertation: Explorability, Satisficing, and Satisfaction in Parameter Spaces.

To understand my research, one must first understand the Tyranny of Choice, a well-studied phenomenon where people can become overwhelmed by a large number of choices. When there are more choices than can be feasibly explored, the user will go with the best option they have seen. I call this exploratory satisficing. There are very few published studies on how interface design can influence exploratory satisficing behavior. This is surprising given that research shows these behaviors influence people’s sense of time, perceived tradeoff between cost and reward, and overall life satisfaction.

In my dissertation, I focus on the impact of interaction and design affordances in software applications where users need to explore fairly large sets of variables (e.g., colors, image parameters) when generating creative content. Because measuring the success of generating “creative content” goes beyond typical usability measures, I am employing metrics from behavioral economics (i.e., the maximization scale), creative cognition (i.e., the creativity support index), and an explorability metric I have developed for my studies. In one study, I look at the effect of variations in the interaction technique (single vs. dual cursor) used to navigate color space. In another study, I look at variations in the granularity of control (coarse vs. fine) when adjusting image parameters (e.g., brightness) and filters (e.g., blur). When complete, my work can help researchers better understand exploratory satisficing. For some users, not exploring additional options could leave them feeling that they are missing out on more desirable possibilities. Additionally, more options mean these users could spend excessive amounts of time searching through the design space, hoping they have not missed anything useful or interesting.

Tangible Creativity

A collaborative project between the HCI Labs at UNC Charlotte and the University of Maryland, in cooperation with KidsTeam.

A major goal of this project is to develop a better understanding of the relationships between the design features of tangibles, the gestures used when thinking about creative tasks, and creativity. The project explores correlations between basic categories of gesture, perception, and cognition as a basis for the development of sustainable principles that may inform and guide the development of new technology beyond the current implementation of TUIs.

Publications:

Clausner, T., Maher, M.L., and Gonzalez, B. 2015. Conceptual Combination Modulated by Action using Tangible Computers. In Proceedings of the 37th Annual Meeting of the Cognitive Science Society (Member Abstracts). COGSCI 2015. 2873.

Maher, M.L., Clausner, T., Gonzalez, B., and Grace, K. 2014. Gesture in the Crossroads of HCI and Creative Cognition. In Proceedings of the Workshop on Gesture-based Interaction Design at CHI 2014.

Maher, M.L., Gonzalez, B., Grace, K., and Clausner, T. 2014. Tangible Interaction Design: Can we design tangibles to enhance creative cognition? In Proceedings of International Conference on Design Computing and Cognition, DCC 2014. 45-46.

SonicExplorer

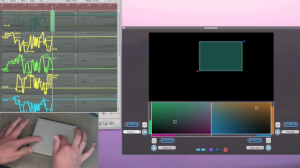

SonicExplorer allows for the control of four different sound parameters using two fingers on a laptop trackpad. SonicExplorer uses MIDI to integrate with existing Digital Audio Workstations, allowing users to simultaneously manipulate controls like attack, reverb, delay, and pitch. The parameters are displayed in SonicExplorer using GeoSpectral Metering. Participants manipulate a pre-generated score and can adjust its tonal quality far enough to make it distinct from the original source.

The GeoSpectral Meter provides multi-sensory exploration of the combined parameter spaces, i.e., the possible combinations of the current sound controls. The controllable rectangle portion of the GeoSpectral Meter supports user control of multiple parameters by providing a perceptual integration of what the two hands are doing. Users do not have to devote much attention to their hands. Instead, they can focus on both manipulating the rectangle in the UI and listening to the resulting effects on the sound. Users can quickly explore the space of possibilities by putting the rectangle (a useful memory cue) into a wide variety of sizes and positions. Furthermore, this helps users explore combinations of the four assignable parameters in less time than an interface that requires mode-switching between four individual sound control sliders.
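As a rough illustration of how one rectangle can drive four parameters at once, the sketch below maps two normalized finger positions to a rectangle and then to four 7-bit MIDI controller values. It is a minimal sketch, not SonicExplorer's actual mapping: the function names, the choice of center and size as the four control dimensions, and the assignment of attack, reverb, delay, and pitch to those dimensions are all assumptions made for the example.

```python
def to_cc(value):
    """Scale a normalized value in [0, 1] to a MIDI controller value (0-127)."""
    clamped = max(0.0, min(value, 1.0))
    return round(clamped * 127)


def rectangle_to_cc(finger_a, finger_b):
    """Turn two finger positions into four controller values.

    Each finger is an (x, y) pair normalized to the trackpad, so both
    coordinates lie in [0, 1]. The two fingers are treated as opposite
    corners of the rectangle (an assumption for this sketch).
    """
    (ax, ay), (bx, by) = finger_a, finger_b
    center_x = (ax + bx) / 2
    center_y = (ay + by) / 2
    width = abs(ax - bx)
    height = abs(ay - by)
    # Hypothetical assignment of rectangle dimensions to sound controls.
    return {
        "attack": to_cc(center_x),
        "reverb": to_cc(center_y),
        "delay": to_cc(width),
        "pitch": to_cc(height),
    }


if __name__ == "__main__":
    print(rectangle_to_cc((0.2, 0.3), (0.8, 0.9)))
```

Moving both fingers together shifts the center (two parameters), while spreading or pinching them changes the size (the other two), so all four values can change in a single gesture without switching modes.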

In a user study, we received positive feedback from participants. Users’ fingers would move in rhythm with the sound they were manipulating, offloading cognition that can then be used for creative processes. Users found that the color mappings played a role in their creative exploration and commented on the relationship between color, sound, emotions, and the UI control.

Publications:

Adams, A., Gonzalez, B., and Latulipe, C. 2014. SonicExplorer: Fluid Exploration of Audio Parameters. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI 2014. 237-246.

Gonzalez, B., Adams, A., and Latulipe, C. 2014. SonicExploratorium: An Interactive Exhibit of Sonic Discovery. In Extended Abstracts of CHI 2014. 395-398.

Bimanual Color Exploration Plugin (BiCEP)

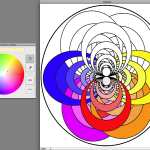

BiCEP is a Mac OS X color picker plugin that enables dual cursor color selection using two fingers on the trackpad. There is no need for custom dual-cursor software, since BiCEP is available to any application that uses the system color picker. BiCEP allows for the traversal of a very specific and unified parameter space, the Hue, Saturation, and Brightness (HSB) color space.

Separate sliders require tedious mode switching between the parameters and inhibit explorability. Unlike previous dual-cursor applications, BiCEP needs no additional hardware (e.g., a second computer mouse). When a user places two fingers on the Apple trackpad, they control two cursors within the plugin. The right cursor, controlled by the index finger of the right hand, moves within the bounds of a color wheel, adjusting the hue and saturation values. The left cursor, controlled by the index finger of the left hand, moves within the bounds of a rectangular bar, adjusting the brightness value. The arrangement of the color wheel on the right and the brightness bar on the left follows a right-handedness mapping, where the non-dominant hand does the less detailed task. The dominant hand controls the color wheel, which has two spatial dimensions (X and Y), while the non-dominant hand controls the brightness bar, which has a single spatial dimension (Y). At the very top there is a color bar that represents the currently selected color, a combination of the hue and saturation from the color wheel with the brightness from the brightness bar. The magnifying glass at the top is a color eyedropper tool provided by the operating system; it is a required component of all Mac color picker plugins.
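The two-cursor mapping described above can be sketched in a few lines. This is an illustrative sketch rather than BiCEP's code: it assumes a unit color wheel centered at the origin, hue measured counterclockwise from the positive X axis, saturation equal to the distance from the center, and a brightness bar normalized from 0 (bottom) to 1 (top). The function names are hypothetical; `colorsys.hsv_to_rgb` is from the Python standard library.

```python
import colorsys
import math


def wheel_to_hue_sat(x, y):
    """Map the right cursor's position on a unit color wheel to (hue, saturation)."""
    # Distance from the center gives saturation; clamp to the wheel's edge.
    saturation = min(math.hypot(x, y), 1.0)
    # Angle around the wheel gives hue, normalized to [0, 1).
    hue = (math.atan2(y, x) / (2 * math.pi)) % 1.0
    return hue, saturation


def bar_to_brightness(y):
    """Map the left cursor's height on the bar (0 = bottom, 1 = top) to brightness."""
    return max(0.0, min(y, 1.0))


def pick_color(right_cursor, left_cursor_y):
    """Combine both cursors into one RGB color with components in [0, 1]."""
    hue, saturation = wheel_to_hue_sat(*right_cursor)
    brightness = bar_to_brightness(left_cursor_y)
    return colorsys.hsv_to_rgb(hue, saturation, brightness)


if __name__ == "__main__":
    # Right cursor on the wheel's edge along +X, left cursor at the top of the bar.
    print(pick_color((1.0, 0.0), 1.0))
```

Because both cursors feed a single function, all three HSB values can change in the same gesture, which is the contrast with separate sliders drawn in the paragraph above.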

Publications:

Gonzalez, B. and Latulipe, C. 2011. BiCEP: Bimanual Color Exploration Plugin. In Extended Abstracts of CHI 2011. 1483-1488.

Dance.Draw

The Dance.Draw project is a collaboration at UNC Charlotte between Dr. Celine Latulipe and Dr. David Wilson in the Software and Information Systems Department and Professor Sybil Huskey from the Department of Dance. This work was supported by NSF CreativeIT Award #IIS-0855882.

In the Dance.Draw project, I worked closely with three different choreographers on eight different interactive dance productions. Early on, I recognized the value of genuine collaboration and avoided treating dancers as research guinea pigs. As a result, I was given the title of “Aesthetic Technologist,” representing my ability to navigate and negotiate between the intentions of the dance, the aesthetic look of the piece, and the goals of the research. The choreographers (and dancers) saw me as an active contributor to the dance, and I saw the choreographers as experts in movement and in how technology should respond to those movements.

Publications:

Gonzalez, B., Carroll, E., and Latulipe, C. 2012. Dance-inspired technology, technology-inspired dance. In Proceedings of the Nordic Conference on Human-Computer Interaction, NordiCHI 2012. 398-407.

Latulipe, C., Wilson, D., Huskey, S., Gonzalez, B., and Word, M. 2011. Temporal Integration of Interactive Technology in Dance: Creative Process Impacts. In Proceedings of the 8th Conference on Creativity and Cognition, C&C 2011. 107-116.

Latulipe, C., Wilson, D., Gonzalez, B., Harris, A., Carroll, E., Huskey, S., Word, M., Beasley, R., and Nifong, N. 2011. SoundPainter. In Proceedings of C&C 2011. 439-440.

Latulipe, C., Wilson, D., Huskey, S., Word, M., Carroll, A., Carroll, E., Gonzalez, B., Singh, V., Wirth, M., and Lottridge, D. 2011. Exploring the design space in technology-augmented dance. In Extended Abstracts of CHI 2011. 2995-3000.

Northwestern University (2006-2009)

Virtual Peers

I served as the technology lead for the project “Bridging the Achievement Gap Through Authorable Virtual Peers”. The project focused on the creation of a gender- and race-ambiguous virtual peer who engaged children by telling stories and building a bridge using Lego Duplo blocks. My work focused on the development of the interface software that controls the virtual agent, the features that make the agent semi-autonomous, and the cognitive models that direct the agent’s and operator’s decisions. I also co-developed and conducted the studies for eliciting culturally authentic style shifting as children engaged in STEM-based reasoning with the Virtual Peer. This project is still active and has since moved to the Articulab at Carnegie Mellon.

Publications:

Cassell, J., Geraghty, K., Gonzalez, B., and Borland, J. 2009. Modeling Culturally Authentic Style Shifting with Virtual Peers. In Proceedings of the International Conference on Multimodal Interfaces, ICMI-MLMI 2009. 135-142.

Gonzalez, B., Borland, J., and Geraghty, K. 2009. Whole Body Interaction for Child-Centered Multimodal Language Learning. In Proceedings of the Workshop on Child, Computer and Interaction, WOCCI 2009. Article No. 4.

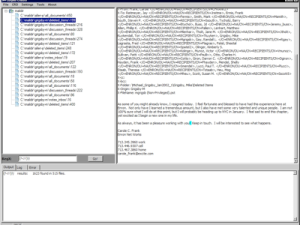

Corpus Cruizer

Corpus Cruizer is a linguistic analysis tool that I designed to perform regular expression pattern matching on large corpora. The immediate goal of the project was to allow one of our researchers to look for subtle paralinguistic cues that may have been used in the ENRON email dataset. The tool was developed using Python and wxPython. I worked on this software when I was a research staff member at the Center for Technology and Social Behavior.
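To give a sense of the kind of pattern matching involved, here is a small sketch that scans messages for letters or punctuation marks repeated several times in a row, one example of a paralinguistic cue. It is not Corpus Cruizer's code: the pattern, the threshold of three repetitions, and the `find_repeats` helper are assumptions chosen for illustration, and the sample messages are invented.

```python
import re

# A letter or one of a few punctuation marks, followed by at least two more
# copies of the same character (three or more in total). The character set
# and the threshold are assumptions for this sketch.
REPEAT = re.compile(r"([A-Za-z!?.])\1{2,}")


def find_repeats(messages):
    """Return (message_index, matched_text) for every repeat found."""
    hits = []
    for index, text in enumerate(messages):
        for match in REPEAT.finditer(text):
            hits.append((index, match.group(0)))
    return hits


if __name__ == "__main__":
    sample = ["That is sooo great!!!", "See you at 3."]
    for index, text in find_repeats(sample):
        print(index, text)
```

On a large corpus the same loop can run over messages read lazily from disk, so the whole dataset never has to sit in memory at once.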

The tool was mentioned in the following publication:

Kalman, Y. M. and Gergle, D. (2009). Letter and Punctuation Mark Repeats as Cues in Computer-Mediated Communication. Paper presented at the National Communication Association's 95th annual convention, November 12-15, 2009 in Chicago, Illinois.

ImageMaster and TWDManager

ImageMaster was designed for navigating high-resolution images using a mouse. We transferred the interface onto a touch screen and found many affordance and interaction differences. My contribution to this project was to investigate those differences between two Flash interfaces that performed similar tasks using different modalities. The new navigation interface looks like the one pictured. A scroll wheel was used for zooming, buttons became larger, etc.

TWDManager was part of an effort to make a Plone Package that could be used to distribute the Pixzilla Tiled Wall Display system. The goal was to streamline the connection of various systems that are used when creating Tiled Wall Displays: ImageMaster, GeoWall Visualization, Plone Server, etc.

I worked on these projects as an undergraduate working at Academic Technologies, Northwestern University.